import torch
import numpy as np
import gym
import torch.nn as nn
import matplotlib.pyplot as plt
from torch.nn import functional as F

class policynet(nn.Module):
    def __init__(self, state_dim, action_dim, hidden_dim=64):
        super(policynet, self).__init__()
        self.layer1 = nn.Linear(state_dim, hidden_dim)
        self.layer2 = nn.Linear(hidden_dim, hidden_dim)
        self.layer3 = nn.Linear(hidden_dim, action_dim)

    def forward(self, input):
        x = F.leaky_relu(self.layer1(input))
        x = F.leaky_relu(self.layer2(x))
        x = self.layer3(x)
        mu = 2.1 * F.tanh(x)
        return mu

class valuenet(nn.Module):
    def __init__(self, state_dim, value_dim=1, hidden_dim=64):
        super(valuenet, self).__init__()
        self.layer1 = nn.Linear(state_dim, hidden_dim)
        self.layer2 = nn.Linear(hidden_dim, hidden_dim)
        self.layer3 = nn.Linear(hidden_dim, value_dim)

    def forward(self, input):
        x = F.relu(self.layer1(input))
        x = F.relu(self.layer2(x))
        out = self.layer3(x)
        return out

class PPOagent:
    def __init__(self, gama, policy_lr, critic_lr, lamda, state_dim, action_dim, epoch, device, eps=0.2):
        self.gama = gama
        self.policy_lr = policy_lr
        self.critic_lr = critic_lr
        self.lamda = lamda
        self.epoch = epoch
        self.eps = eps
        self.device = device
        self.critic = valuenet(state_dim).to(self.device)
        self.actor = policynet(state_dim, action_dim).to(self.device)
        # State-independent standard deviation, trained together with the actor
        self.policy_std = nn.Parameter(torch.ones(action_dim, device=self.device) * 0.1)
        self.actor_optim = torch.optim.Adam(list(self.actor.parameters()) + [self.policy_std], lr=self.policy_lr)
        self.critic_optim = torch.optim.Adam(self.critic.parameters(), lr=self.critic_lr)

    def getaction(self, state):
        state = torch.tensor(state, dtype=torch.float).unsqueeze(0).to(self.device)  # add a batch dimension to the input
        with torch.no_grad():
            mu = self.actor(state)
            std = self.policy_std.expand_as(mu)
            actor_list = torch.distributions.Normal(mu, std)
            action = actor_list.sample()
            action_tanh = torch.tanh(action)
            scaled_action = action_tanh * 2.0  # assume the action range is [-2, 2]
            log_prob = actor_list.log_prob(action) - torch.log(1 - action_tanh.pow(2) + 1e-7)
            log_prob = log_prob.sum(dim=1, keepdim=True)
        return scaled_action.cpu().numpy()[0], log_prob.cpu().numpy()

    def learn(self, transition):
        # Convert the collected episode to tensors
        state = np.array(transition.get('state'))
        state = torch.tensor(state, dtype=torch.float).to(self.device)
        action = np.array(transition.get('action'))
        action = torch.tensor(action, dtype=torch.float).to(self.device)
        reward = np.array(transition.get('reward'))
        reward = torch.tensor(reward, dtype=torch.float).view(-1, 1).to(self.device)
        next_state = np.array(transition.get('next_state'))
        next_state = torch.tensor(next_state, dtype=torch.float).to(self.device)
        done = transition.get('done')
        done = torch.tensor(done, dtype=torch.int32).view(-1, 1).to(self.device)
        old_probs = transition.get('old_probs')
        old_probs = torch.tensor(old_probs, dtype=torch.float).to(self.device)
        # reward = (reward - reward.mean()) / (reward.std() + 1e-8)

        # One-step TD target and TD error
        td_target = reward + self.gama * self.critic(next_state) * (1 - done)
        td_value = self.critic(state)
        td_delta = (td_target - td_value).detach().cpu().numpy()

        # GAE: accumulate discounted TD errors backwards through the trajectory
        advantage = 0
        advantage_list = []
        for delta in reversed(td_delta):
            advantage = delta + self.gama * self.lamda * advantage
            advantage_list.append(advantage)
        advantage_list = np.array(advantage_list)
        advantage = torch.tensor(advantage_list, dtype=torch.float).to(self.device)
        advantage = torch.flip(advantage, dims=[0])
        # advantage = (advantage - advantage.mean()) / (advantage.std() + 1e-8)  # normalize

        for i in range(self.epoch):
            mu = self.actor(state)
            std = self.policy_std.expand_as(mu)
            std = torch.clamp(std, 1e-6, 10)
            dist = torch.distributions.Normal(mu, std)
            entropy = dist.entropy()
            probs = dist.log_prob(action)
            ratio = torch.exp(probs - old_probs)
            # Clipped surrogate objective with an entropy bonus
            sur1 = torch.clamp(ratio, 1 - self.eps, 1 + self.eps) * advantage
            sur2 = ratio * advantage
            actorloss = -torch.min(sur1, sur2).mean() - 0.01 * entropy.mean()
            criticloss = F.mse_loss(self.critic(state), td_target.detach())
            self.actor_optim.zero_grad()
            self.critic_optim.zero_grad()
            actorloss.backward()
            criticloss.backward()
            self.critic_optim.step()
            self.actor_optim.step()
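One thing that may be worth checking in the class above: `getaction` stores the log-probability of a tanh-squashed sample (with the `log(1 - tanh^2)` correction), while `learn` evaluates `dist.log_prob(action)` on the stored, already scaled action with no correction. If the two are not the same function of the same variable, the PPO ratio would not start at 1 even before any parameter update. The sketch below reproduces both formulas for a single scalar using only `math`. The helper names and the values of `mu`, `std`, and `u` are made up for illustration and do not come from a real rollout.

```python
import math

def normal_log_prob(x, mu, std):
    """Log-density of a scalar Normal(mu, std) at x."""
    return -0.5 * ((x - mu) / std) ** 2 - math.log(std) - 0.5 * math.log(2 * math.pi)

def old_log_prob(u, mu, std):
    """What getaction stores: Normal log-prob of the raw sample u minus the tanh correction."""
    return normal_log_prob(u, mu, std) - math.log(1 - math.tanh(u) ** 2 + 1e-7)

def new_log_prob(u, mu, std):
    """What learn evaluates: Normal log-prob of the stored action 2 * tanh(u), no correction."""
    return normal_log_prob(2.0 * math.tanh(u), mu, std)

# Illustrative values only (not taken from a real rollout).
mu, std, u = 0.5, 0.1, 0.6
ratio = math.exp(new_log_prob(u, mu, std) - old_log_prob(u, mu, std))
print(f"ratio with unchanged parameters: {ratio:.3e}")
```

If the printed ratio is far from 1, the clipped objective is being fed a ratio that does not reflect an actual policy change. One common way to make the two sides agree, offered as a suggestion rather than a confirmed fix, is to store the raw Gaussian sample together with its plain `log_prob`, and to clip the action only when passing it to `env.step`.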

env = gym.make('Pendulum-v1')
gama = 0.99
critic_lr = 5e-3
policy_lr = 1e-3
lamda = 0.95
state_dim = env.observation_space.shape[0]
action_dim = env.action_space.shape[0]
epoch = 10
num_episode = 100
max_step = 200
return_list = []
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
agent = PPOagent(gama, policy_lr, critic_lr, lamda, state_dim, action_dim, epoch, device)

for i in range(num_episode):
    state = env.reset()[0]
    done = False
    esp_return = 0
    step_count = 0
    transition = {
        'state': [], 'action': [],
        'reward': [], 'next_state': [],
        'done': [], 'old_probs': []
    }
    # Collect one episode, then update on it
    while not done:
        action, old_probs = agent.getaction(state)
        next_state, reward, done, _, _ = env.step(action)
        transition['state'].append(state)
        transition['action'].append(action)
        transition['reward'].append(reward)
        transition['next_state'].append(next_state)
        transition['done'].append(done)
        transition['old_probs'].append(old_probs)
        state = next_state
        esp_return = esp_return + reward
        step_count += 1
        if step_count >= max_step:
            done = True
    return_list.append(esp_return)
    agent.learn(transition)
    if i % 1 == 0:
        print(f'Episode {i}/{num_episode}, Return: {esp_return}')

env.close()
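A second thing that could be checked is the shape of `old_probs`. `getaction` returns `log_prob.cpu().numpy()` with shape `(1, 1)`, and the rollout loop above appends one such array per step, so the stacked array would have shape `(T, 1, 1)`, whereas `dist.log_prob(action)` in `learn` has shape `(T, 1)`. The sketch below uses NumPy only, with made-up zero arrays and a hypothetical `T`, to show what broadcasting does to that subtraction; PyTorch follows the same broadcasting rules.

```python
import numpy as np

T = 5  # hypothetical number of steps in one episode

# One (1, 1) array per step, as returned by getaction, collected in a list.
old_probs = np.array([np.zeros((1, 1)) for _ in range(T)])
# Log-probs recomputed in learn for a batch of T one-dimensional actions.
probs = np.zeros((T, 1))

diff = probs - old_probs
print(old_probs.shape, probs.shape, diff.shape)

# Flattening the stored values to (T, 1) keeps the ratio element-wise.
diff_fixed = probs - old_probs.reshape(-1, 1)
print(diff_fixed.shape)
```

With `T = 5` the first difference has shape `(5, 5, 1)`, meaning every step's new log-prob is paired with every step's old log-prob, while the reshaped version stays at `(5, 1)`. Printing `ratio.shape` and `advantage.shape` inside `learn` would confirm whether the same thing happens in the real run.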

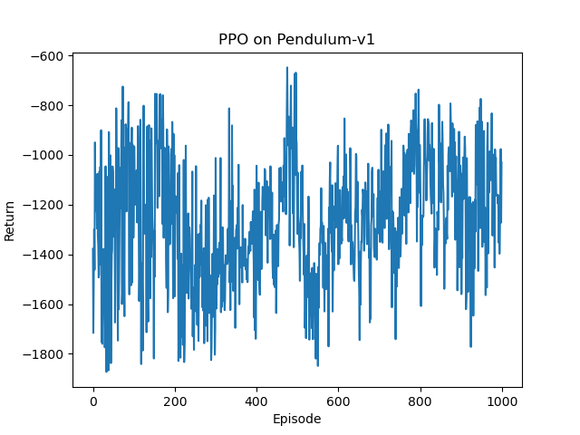

plt.figure()
plt.plot(return_list)
plt.xlabel('Episode')
plt.ylabel('Return')
plt.title('PPO on Pendulum-v1')
plt.show()

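I'm new to reinforcement learning, and the very first program I wrote already has a problem. I've trained it many times and the return curve always looks like the one in the image above. I've checked everything I can think of and still can't find the cause. Could someone more experienced please take a look? I'd really appreciate it.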
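For anyone reviewing the advantage computation in `learn`, here is the same backward recursion written as a standalone function in plain Python, so that part can be ruled in or out separately. It is a minimal sketch: the function name `gae_advantages` and the sample TD errors are hypothetical, and like the loop in `learn` it treats the whole list as a single trajectory.

```python
def gae_advantages(deltas, gamma, lam):
    """Backward recursion A_t = delta_t + gamma * lam * A_{t+1}, with A after the last step set to 0."""
    advantages = [0.0] * len(deltas)
    running = 0.0
    for t in reversed(range(len(deltas))):
        running = deltas[t] + gamma * lam * running
        advantages[t] = running
    return advantages

# Made-up TD errors, only to exercise the function.
deltas = [1.0, 0.0, -1.0]
adv = gae_advantages(deltas, gamma=0.99, lam=0.95)
print(adv)
```

Feeding the flattened `td_delta` from one episode into this function and comparing the result with `advantage` in `learn` would show whether the two agree in both values and ordering.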
